Look, I didn't plan to spend three weeks obsessing over what chatbots think about lawyers. That was never on my to-do list. But there I was at like 12:30 on a Tuesday night, my yerba mate already cold, laptop balanced on the kitchen table because my girlfriend had claimed the desk hours ago. I'd been pulling threads on how AI platforms actually pick which attorneys to recommend, and the research kept getting worse.

So I decided to test it myself.

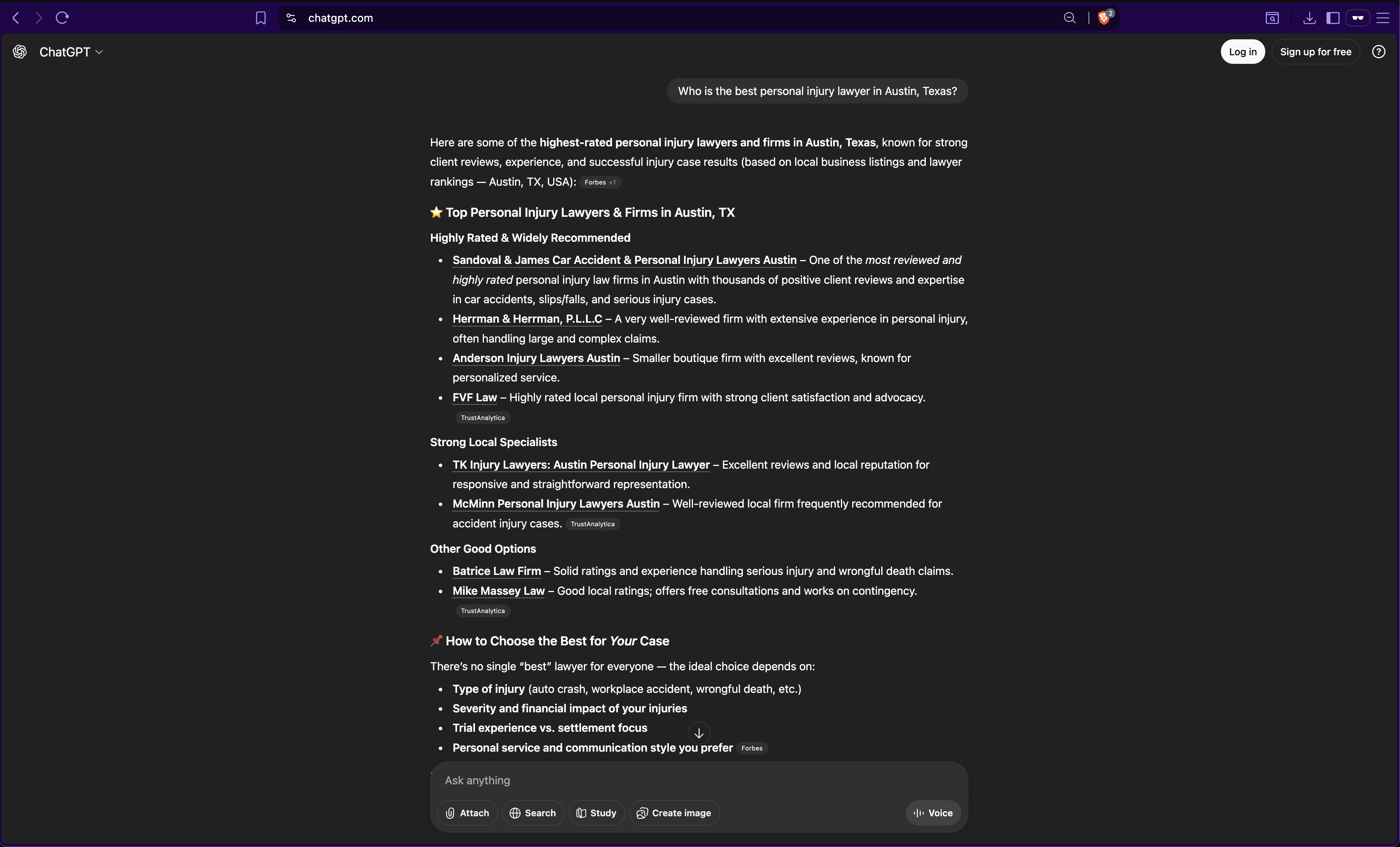

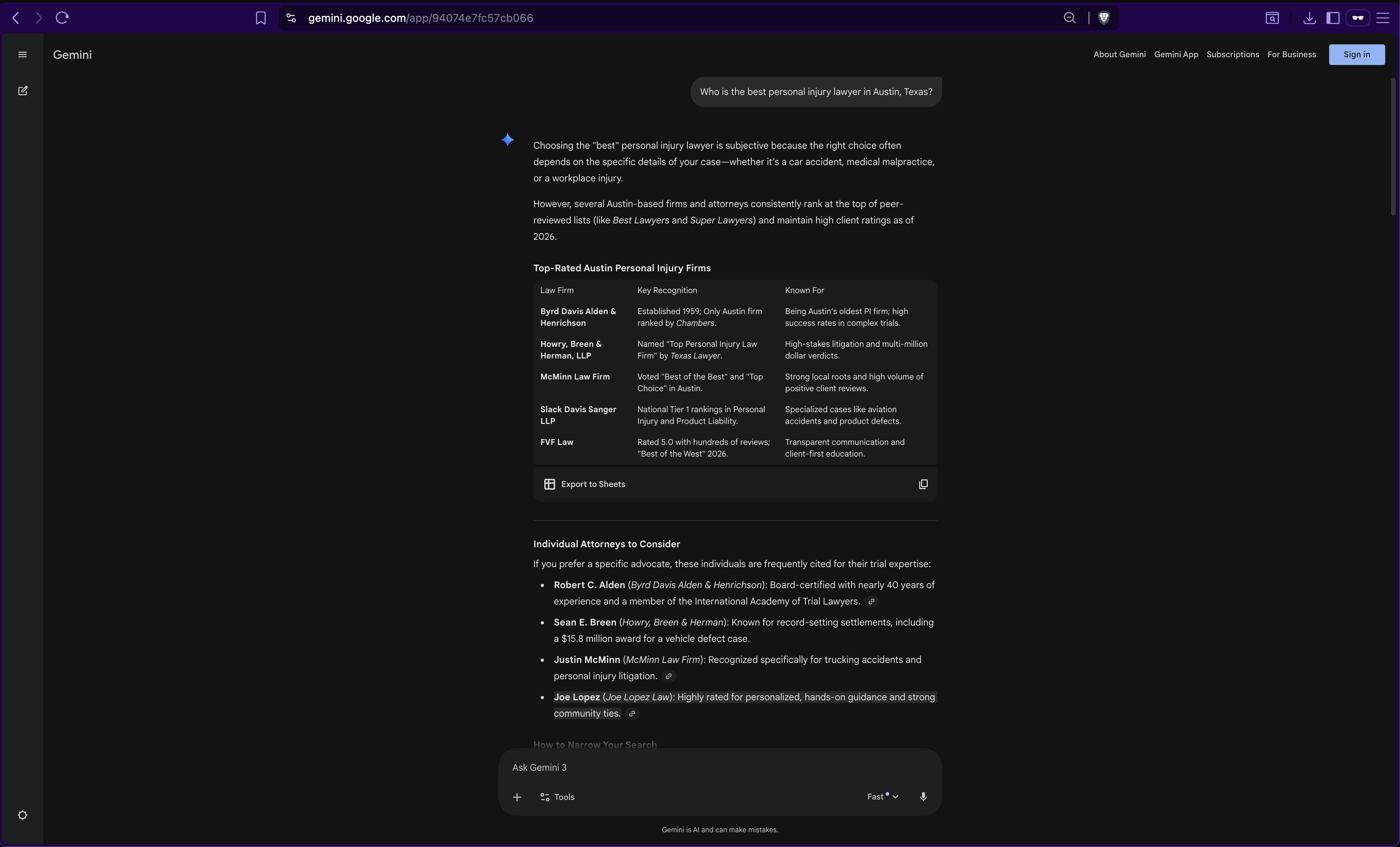

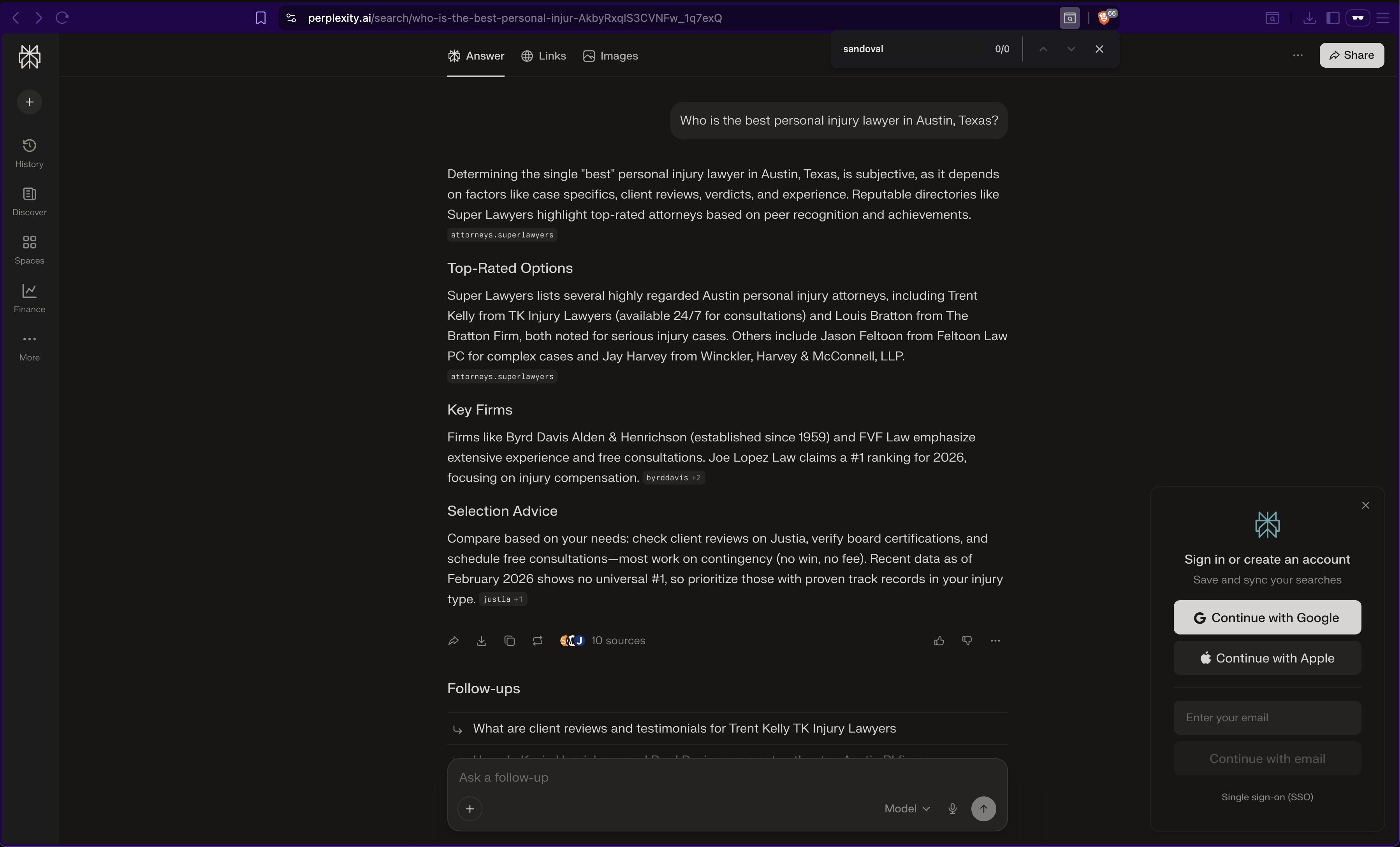

I opened three fresh sessions. ChatGPT. Gemini. Perplexity. Incognito windows, no history, no preferences, completely clean. Same question on each: "Who is the best personal injury lawyer in Austin, Texas?"

The answers came back in seconds. And they had almost nothing in common.

ChatGPT led with Sandoval & James, called them "one of the most reviewed and highly rated personal injury law firms in Austin." Then Herrman & Herrman, Anderson Injury Lawyers, FVF Law, TK Injury Lawyers, McMinn. Eight firms total. It cited Forbes and TrustAnalytica as sources. At the bottom it mentioned individual attorneys like Robert C. Alden, Sean E. Breen, and Michelle M. Cheng, almost as an afterthought.

Gemini gave me a completely different structure. It organized everything into a ranked table: Byrd Davis Alden & Henrichson at the top — Austin's oldest PI firm, the only one ranked by Chambers. Then Howry, Breen & Herman, named "Top Personal Injury Law Firm" by Texas Lawyer. McMinn Law Firm. Slack Davis Sanger. FVF Law. Below the firms, it listed individual attorneys: Robert C. Alden with nearly 40 years of experience and membership in the International Academy of Trial Lawyers, Sean E. Breen known for a $15.8 million settlement, Justin McMinn, Joe Lopez. Gemini even told me to interview two or three lawyers before signing and to verify board certifications. The AI sending you to fact-check itself. That's a new one.

Perplexity leaned heavily on Super Lawyers as its source. Trent Kelly from TK Injury Lawyers. Louis Bratton from The Bratton Firm. Jason Feltoon. Jay Harvey. Then it mentioned Byrd Davis and FVF Law as "key firms" and noted Joe Lopez Law "claims a #1 ranking for 2026." Different names, different sources, different hierarchy. Perplexity even told me to check Justia for client reviews, a directory ChatGPT never mentioned.

Three platforms. Three completely different sets of lawyers being recommended. I counted: only a handful of firms showed up on more than one. Most appeared on one platform and were invisible on the other two. Same city. Same practice area. Same question.

And then one comparison stopped me cold.

Sandoval & James has 2,629 Google reviews and a 4.9 rating. I checked. Full Google Business Profile, hours listed, photos, the works. ChatGPT's number one pick.

Joe Lopez Law has 74 Google reviews. If you search for them on Google you don't even get a proper business listing page. Gemini still recommended them — and in a previous run, put them at the very top of the list.

2,629 reviews versus 74. And the platform built on Google's own data picked the firm with 74.

I kept pulling at it. Gemini weighted things I didn't expect. Board Certifications. Membership in groups like the International Academy of Trial Lawyers. Named case results against specific defendants. Third-party editorial recognition from outlets like Texas Lawyer. These aren't things that show up on a Google Business Profile. They're scattered across the web: news articles, award lists, professional directories, court records. Gemini was assembling a picture of credibility from a completely different set of sources than ChatGPT was using.

Full disclosure: I've worked with DJC Law and know their results firsthand. They've recovered over $400 million for clients. Board Certified trial attorney. Veteran-owned firm. Gemini recommended them in one run. ChatGPT left them off entirely. A firm with that track record, invisible on the platform where visitors convert at 4.4 times the rate of regular search. If it's happening to them, it's happening to you.

The firms that don't show up on any of these platforms? Most of them have no idea they're invisible. And the ones that do show up on one? They think they're covered.

They're not.

I've spent the last few weeks going deep into the research behind all of this. How AI platforms actually decide which professionals to recommend, what data they're pulling from, why the results are so different. What the data shows makes it hard to justify the "wait and see" approach.

The Numbers That Changed My Mind

Previsible analyzed 1.96 million LLM sessions in 2025 and found that less than 1% of website traffic came from AI tools.[1] One percent. I get why attorneys shrug at that.

Here's the part they're missing though.

That same study found people who find you through AI convert at 4.4 times the rate of regular Google search visitors. Ahrefs dug into their own numbers and found AI visitors were half a percent of traffic but over 12% of signups.[2] Half a percent of visitors. Twelve percent of conversions.
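If you want to see what that Ahrefs split implies, the arithmetic is short. This is my own back-of-the-envelope sketch, not a calculation from either study: the function name `relative_conversion` is mine, and I'm treating "half a percent" and "12%" as exact when the source says "over 12%."

```python
def relative_conversion(traffic_share, conversion_share):
    """How many times more likely a visitor from one segment is to convert
    than a visitor from everywhere else, given the segment's share of
    traffic and its share of conversions (both as fractions of 1)."""
    segment_rate = conversion_share / traffic_share
    rest_rate = (1 - conversion_share) / (1 - traffic_share)
    return segment_rate / rest_rate


# Assumed inputs: 0.5% of visitors, 12% of signups (the Ahrefs figures above).
ratio = relative_conversion(traffic_share=0.005, conversion_share=0.12)
print(f"AI visitors convert at roughly {ratio:.1f}x the rate of everyone else")
```

That works out to roughly 27x, a much bigger multiple than Previsible's 4.4x. Two different sites, measured two different ways, so I wouldn't compare them directly. The direction is the same in both.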

The person asking ChatGPT about a personal injury lawyer isn't opening fifteen tabs. The AI did the comparison shopping. By the time they call, they're pre-sold.

Eli Schwartz, former head of SEO at SurveyMonkey and author of Product-Led SEO, put it this way: "AI-referred traffic may look small in your analytics dashboard, but it's the highest-intent traffic your site has ever received. These users aren't browsing — they've already been sold."

That's a different kind of lead than someone who clicked your Google ad along with four other Google ads.

AI traffic in legal specifically grew 11.9x in a single year, the fastest growth rate among all professional service categories.[1] Even at 1% of total traffic, the conversion quality makes it worth caring about.

How AI Actually Picks Who to Recommend

When you Google something, you get ten blue links. You click around. You decide. Google's basically a librarian handing you books.

ChatGPT reads the books for you and tells you what it thinks. Different game entirely.

The process is called Retrieval-Augmented Generation (everyone says RAG). When someone asks for a lawyer recommendation, the AI breaks the question apart and fires off multiple searches at once. "Top personal injury attorneys Austin," "PI lawyer reviews Austin TX," "best injury lawyer Austin ratings," all simultaneously. Reads everything. Decides what's trustworthy. Writes a response. Names specific firms. Takes seconds.

But here's what I didn't realize until I started testing: each platform is reading completely different books.

A late-2025 study of 129,000 domains found that 87% of what ChatGPT cites comes from Bing's top 10 search results.[4] Not Google. Bing. And for local business data, ChatGPT pulls from Foursquare. Google's AI Overview pulls from Google's own top 10 results about 76% of the time,[5] plus Google Business Profiles, editorial mentions, credential databases. Perplexity crawls the web in real time and leans hard on Reddit and structured directories like Super Lawyers.

Three platforms. Three completely different data pipelines feeding them. Your marketing agency is probably optimizing for one.

That's why Sandoval & James dominates ChatGPT but Gemini skips them. That's why Joe Lopez tops Gemini in one run with 74 reviews while the firm with 2,629 gets passed over. That's why Perplexity surfaces names like Trent Kelly and Louis Bratton that neither ChatGPT nor Gemini ever mentioned. Each platform is pulling from different pipes and weighting different signals.

So your Google rankings still matter. A lot. If you're not ranking, you're not in the AI answer. But ranking well on Google doesn't get you into ChatGPT if your Foursquare and Bing data are broken. And ranking everywhere on Google doesn't guarantee Gemini picks you if you don't have the editorial signals and credentials it weighs.

Something else is filtering you out too.

The GIST Algorithm: Why Your Content Might Be Invisible

Google runs this thing called GIST (Greedy Independent Set Thresholding). Honestly kind of brutal. When the AI retrieves a bunch of articles on, say, personal injury defense, GIST picks the single best one. The most unique content. Then it draws a circle around that article and throws out everything that covers roughly the same ground. Before the AI even starts writing its response. Gone.

Think about what most legal marketing looks like. Your SEO agency finds the top article, writes a version that's 20% longer with better formatting, builds some links. Under GIST, that new article lands inside the original's radius and gets deleted. You paid someone to create invisible content.

God, when that clicked for me I felt kind of sick.

Consider the difference. A firm publishes "Analysis of 100 Harris County Jury Verdicts in 2025" based on their own case data. Nobody else has that. The AI can't throw it out because nothing similar exists. Meanwhile a firm with 47 blog posts, every single one rewording things from Wikipedia and Nolo — forty-seven posts, and zero would survive the filter.

What survives is stuff the AI can't find anywhere else. Your case data. Your read on local court patterns. Your analysis of a ruling nobody else covered.
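To make the filter concrete, here's a toy version of that greedy pick-and-discard step. It's a simplified sketch under my own assumptions, not Google's implementation: the relevance scores are made up, "similarity" is plain word overlap (Jaccard distance) instead of real embeddings, and the names `greedy_diverse_pick` and `min_distance` are mine.

```python
def jaccard_distance(a, b):
    """1.0 means no shared words, 0.0 means identical word sets."""
    words_a, words_b = set(a.lower().split()), set(b.lower().split())
    if not words_a and not words_b:
        return 0.0
    return 1.0 - len(words_a & words_b) / len(words_a | words_b)


def greedy_diverse_pick(docs, min_distance, k):
    """docs is a list of (title, text, score) tuples.

    Walk the documents from highest score to lowest and keep one only if it
    is at least `min_distance` away from everything already kept."""
    kept = []
    for title, text, score in sorted(docs, key=lambda d: d[2], reverse=True):
        if len(kept) == k:
            break
        if all(jaccard_distance(text, other) >= min_distance for _, other, _ in kept):
            kept.append((title, text, score))
    return [title for title, _, _ in kept]


# Hypothetical documents and scores, purely for illustration.
docs = [
    ("original guide",
     "what to do after a car accident in texas steps to protect your claim", 0.90),
    ("longer rewrite",
     "what to do after a car accident in texas ten steps to protect your injury claim", 0.85),
    ("verdict analysis",
     "analysis of 100 harris county jury verdicts in 2025 median awards by injury type", 0.80),
]

picked = greedy_diverse_pick(docs, min_distance=0.5, k=2)
print(picked)
```

In this toy run the rewrite has the second-highest score and still gets dropped, because it sits too close to the original. The verdict analysis scores lower but survives, because nothing else covers that ground.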

What Actually Moves the Needle

Freshness. Pages updated within 90 days show up 3x more often in AI answers.[7] That practice area page from 2022 that nobody's touched? Actively losing ground to competitors who update quarterly.

Google's March 2024 update went after 87% of sites in sensitive categories (legal, medical, financial) that lacked first-hand expertise.[8] Content with specific statistics gets about 22% more AI visibility. Quotes from named experts bump it another 37%.[6] "We're aggressive litigators who fight for you" tells the AI nothing. "We've taken 43 cases to trial in the last three years with a 78% acquittal rate" tells it everything.

Semantic completeness. The AI doesn't count keywords. It converts your page into a mathematical representation of its meaning and measures how close it is to what the person needs.[6] A page covering car accident liability, insurance bad faith, medical lien negotiation, and statute of limitations procedures crushes a page that says "personal injury lawyer" fifty times.
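Here's a toy illustration of why repetition doesn't help. Real systems use learned embeddings; as a stand-in I'm using plain word-count vectors and cosine similarity, which is an assumption on my part and much cruder than what any platform runs. The query and page texts are invented for the example.

```python
import math
from collections import Counter


def to_vector(text):
    """Crude stand-in for an embedding: a bag of lowercase word counts."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (0.0 to 1.0)."""
    dot = sum(count * b[word] for word, count in a.items() if word in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical query and pages.
query = to_vector(
    "injury lawyer for car accident insurance claim and statute of limitations"
)
stuffed_once = to_vector("personal injury lawyer")
stuffed_fifty = to_vector("personal injury lawyer " * 50)
complete = to_vector(
    "car accident liability insurance bad faith medical lien negotiation "
    "statute of limitations"
)

score_once = cosine(query, stuffed_once)
score_fifty = cosine(query, stuffed_fifty)
score_complete = cosine(query, complete)
print(round(score_once, 3), round(score_fifty, 3), round(score_complete, 3))
```

Cosine similarity measures direction, not length, so saying the same three words fifty times leaves the score exactly where it was. The page that covers more of what the person asked about scores higher.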

Domain authority matters but it's indirect. Sites with 32,000+ referring domains are 3.5x more likely to get cited, not because the AI checks authority scores, but because those sites rank higher in the search results the AI pulls from.[7] For most small firms, your website probably won't be the primary source. Getting mentioned ON a high-authority site though. That moves things.

Entity recognition compounds over time. If your firm shows up consistently across Wikidata, Google Knowledge Panels, directories, news, bar associations, the AI starts treating you as a known quantity. Once it recommends you, more content gets created about you, which makes the AI more confident, which gets you recommended more. It snowballs.

And that snowball explains something from my Austin test. The firms showing up across multiple platforms had one thing in common: consistent, verifiable information across multiple sources. Board Certifications you can check. Named case results with dollar amounts. Award mentions from third parties. The AI cross-referenced all of it and got confident. The firms with great Google reviews but nothing else? Visible on one platform. Invisible everywhere else. 2,629 reviews and it didn't matter.

Hard numbers beat marketing language every time. Proprietary data gets cited 34% of the time versus 28% for generic how-to articles.[7] "We resolved 312 personal injury claims in 2025 with an average settlement 2.3x above the state median" gives the AI something specific to match against queries. "We handle many personal injury cases" gives it nothing.

Directories Aren't Dead. I Was Wrong.

Directories. I went into this assuming they were dead. Avvo, Justia, Martindale. Everyone's been writing their obituary for years.

I was wrong and I'm kind of annoyed about it.

Best Lawyers gets explicitly cited by ChatGPT, Perplexity, and Gemini.[9] ChatGPT cited Forbes' lawyer rankings in my Austin test. Perplexity leaned on Super Lawyers as a primary source. Martindale-Avvo (they own Avvo, Nolo, Lawyers.com, and Martindale-Hubbell now) reports a "True Contacts Multiplier" of 2.1x, meaning for every ten contacts a profile generates directly, eleven more happen elsewhere because AI surfaced the data.[10] Super Lawyers shows up in ChatGPT "best of" lists. FindLaw appears in Google AI Mode queries. Perplexity told me to check Justia for reviews. And Gemini straight up recommended Best Lawyers and Super Lawyers to verify its own answers. The AI is sending people TO directories.

Then there's Foursquare.

ChatGPT doesn't use Google Maps for local business data. It uses Foursquare. Full stop. If your Foursquare listing is empty — and come on, when's the last time any law firm thought about Foursquare — ChatGPT can't find you for local queries. Your competitors who accidentally have a Foursquare profile from 2014 with an old address are still more findable than a firm with nothing. We're in 2026 and Foursquare matters. Go figure.

I pulled up Sandoval & James on Foursquare after seeing them top ChatGPT's list. Twenty photos, full address, phone number, website linked, Facebook connected, description filled out. A complete profile. Then I checked some of the firms Gemini recommended instead. Skeleton listings. "Law Office" as the category. Zero tips. Those firms dominated Gemini because their credentials and editorial signals were strong, but ChatGPT couldn't see them because their Foursquare data had nothing to work with.

Different platforms, different data, different outcomes. One firm winning on Foursquare, losing on editorial signals. Another winning on credentials and press mentions, invisible on the Bing pipeline. Same city. Same practice area. And nobody's marketing agency is checking all three.

One analysis found brands on 4+ platforms were nearly 3x more likely to appear in ChatGPT answers.[3] The AI cross-references. When it sees your name on Avvo AND Google Business AND a news article AND a Reddit thread, that's when it gets confident.

Empty profiles are the most common mistake. A bare Avvo listing with just a name and phone number gives the AI nothing to work with. And firms paste the same bio across every directory, which defeats the purpose. Different platforms should say different things about you. Trial record on one, community involvement on another. The AI wants more total information, not the same paragraph five times.

On Yelp: "5 authentic Yelp reviews carry more weight with AI platforms than 5,000 suspicious Google reviews." Yelp's harder to fake. The AI knows that.

Reddit, Schema & The Stuff Nobody's Doing

Reddit has formal licensing deals with both Google and OpenAI for training data. It's the second most-cited source in ChatGPT answers after Wikipedia.[2] Nearly half of what Perplexity cites comes from Reddit.

As Rand Fishkin, co-founder of SparkToro, noted: "Reddit is where the AI goes to check what real humans actually think. If you're not there, you're ceding that trust signal to whoever is."

A lawyer spending thirty minutes a week giving genuinely helpful, non-promotional answers on r/legaladvice or their local subreddit will generate more AI visibility than five grand of SEO content.

Schema markup matters too. That structured code telling the AI what your content means. LegalService, Attorney, FAQPage types. FAQ schema alone bumps citation rates by 40% according to iPullRank.[6] Microsoft confirmed at SMX Munich in 2025 that it helps their AI process content.[11] Boring work. Worth doing.
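For anyone who hasn't seen schema markup, here's roughly what it looks like. This is a minimal sketch that builds JSON-LD for the schema.org `LegalService` and `FAQPage` types. The firm name, URL, phone number, and FAQ text are all placeholders, and the helper names are mine. A real page would carry more properties than this.

```python
import json


def legal_service_schema(name, url, phone, city, region):
    """Minimal schema.org LegalService object as a Python dict."""
    return {
        "@context": "https://schema.org",
        "@type": "LegalService",
        "name": name,
        "url": url,
        "telephone": phone,
        "address": {
            "@type": "PostalAddress",
            "addressLocality": city,
            "addressRegion": region,
        },
    }


def faq_schema(pairs):
    """schema.org FAQPage built from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


def as_script_tag(data):
    """Wrap the JSON-LD in the script tag that goes in the page's HTML."""
    return (
        '<script type="application/ld+json">\n'
        + json.dumps(data, indent=2)
        + "\n</script>"
    )


# Placeholder values only; swap in the firm's real details.
firm = legal_service_schema(
    "Example Injury Law", "https://www.example.com", "+1-512-555-0100", "Austin", "TX"
)
faq = faq_schema([
    ("How long do I have to file a personal injury claim?",
     "Placeholder answer written and reviewed by the firm's attorneys."),
])

print(as_script_tag(firm))
print(as_script_tag(faq))
```

Paste the output into the page's HTML and run it through a structured data validator before you ship it.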

The firms actually showing up in AI answers all do one thing that sounds stupidly simple. They check. They open ChatGPT and ask for a lawyer like them in their city. Same on Gemini. Same on Perplexity. Monthly. If they're not appearing, they know.

Most firms would be genuinely shocked if they ran that test once. I was, and I ran it expecting messy results. They were worse than messy. They were contradictory.
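If you want to keep score on that monthly check, a few lines of Python will do it. This sketch uses the firms named in my Austin write-up, transcribed by hand. Two caveats: I've shortened some names so they match across platforms (that normalization is my assumption), and ChatGPT listed eight firms but only the six I named above are in here.

```python
from collections import Counter

# Hand-transcribed from one run per platform; partial for ChatGPT.
recommendations = {
    "ChatGPT": {
        "Sandoval & James", "Herrman & Herrman", "Anderson Injury Lawyers",
        "FVF Law", "TK Injury Lawyers", "McMinn Law Firm",
    },
    "Gemini": {
        "Byrd Davis", "Howry, Breen & Herman", "McMinn Law Firm",
        "Slack Davis Sanger", "FVF Law",
    },
    "Perplexity": {
        "TK Injury Lawyers", "The Bratton Firm", "Byrd Davis",
        "FVF Law", "Joe Lopez Law",
    },
}


def platform_counts(recs):
    """Count how many platforms named each firm."""
    counts = Counter()
    for firms in recs.values():
        counts.update(firms)
    return counts


counts = platform_counts(recommendations)
on_all = sorted(f for f, n in counts.items() if n == len(recommendations))
on_several = sorted(f for f, n in counts.items() if n >= 2)
on_one = sorted(f for f, n in counts.items() if n == 1)

print("On every platform:", on_all)
print("On two or more:", on_several)
print("On only one:", on_one)
```

Swap in your own city's results each month and watch which list your firm lands in.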

The Flywheel Is Already Spinning

AI recommendations create a loop. The AI names certain firms. Those firms get more calls, more reviews, more press. More online mentions. The AI notices the mentions and recommends them more confidently. More calls. More mentions. More confidence. Round and round.

Top brands already capture 15% or more of AI "voice share" in their categories. The pattern is structural. Popular things get recommended more, which makes them more popular, which gets them recommended more. There's no evidence that ChatGPT or Gemini throw in a fresh name just for variety.

I ran the same Gemini query twice, ten minutes apart. Same exact question about Austin personal injury lawyers. The first time, Joe Lopez sat at the very top of the list. The second time, he dropped to fourth among individuals — Byrd Davis took the lead. DJC Law appeared the first time. Gone the second time. Slack Davis Sanger showed up in one run but not the other. The AI isn't a fixed ranking. It's probabilistic. Every query is a new roll. But the firms with stronger signals across more platforms show up on more rolls. That's the compounding advantage, and it's already pulling away from everyone else.
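Since every query is a new roll, one run tells you very little. A rough way to measure it is to repeat the same question several times and track how often each firm shows up. The sketch below assumes you've pasted each run's firm list in by hand. The firm labels and run data are hypothetical, not my Austin results.

```python
from collections import Counter


def appearance_rates(runs):
    """Fraction of runs in which each firm appears at least once."""
    if not runs:
        return {}
    counts = Counter()
    for run in runs:
        counts.update(set(run))  # set() so a firm counts once per run
    return {firm: n / len(runs) for firm, n in counts.items()}


# Hypothetical results from asking the same question four times.
runs = [
    ["Firm A", "Firm B", "Firm C"],
    ["Firm B", "Firm A", "Firm D"],
    ["Firm A", "Firm C", "Firm B"],
    ["Firm A", "Firm D"],
]

rates = appearance_rates(runs)
for firm, rate in sorted(rates.items(), key=lambda item: item[1], reverse=True):
    print(f"{firm}: appeared in {rate:.0%} of runs")
```

A firm that shows up in every run is in a very different position from one that shows up half the time, even if both "appeared" when you checked once.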

Firms building a presence right now, by keeping directories updated, publishing real content, and participating in their communities online, are creating something that'll be genuinely hard to catch in a year or two.

You don't need to overhaul everything by Monday. But you should probably find out what AI is saying about your firm. Because the people who might hire you are already asking.

I'm offering a free AI Visibility Audit. I'll query ChatGPT, Gemini, and Perplexity for your specific practice areas and location, then send you the raw results. What they say. Which competitors come up instead. Where the gaps are.

No pitch attached. Just the data so you can decide what to do with it.

Email manuel@aivortex.io with "AI Audit" in the subject line, your firm name, your city, and your primary practice area. I'll have results back within a few days.

P.S. My mate was cold by 12:30 that night because I'd started testing at 10. Two and a half hours down one rabbit hole. I've now run variations of this test across multiple cities and practice areas. The pattern holds everywhere. Three platforms, three different answers, and the firms in the middle — the ones showing up on all three — are the ones treating this like what it is: a completely different kind of visibility that their $5K/month agency hasn't figured out yet.